Big Omega(Ω)

This notation is denoted by 'Ω', and it is pronounced as 'Big Omega'. Big Omega notation defines lower bound for the algorithm. It means the running time of algorithm cannot be less than its asymptotic lower bound for any random sequence of data.

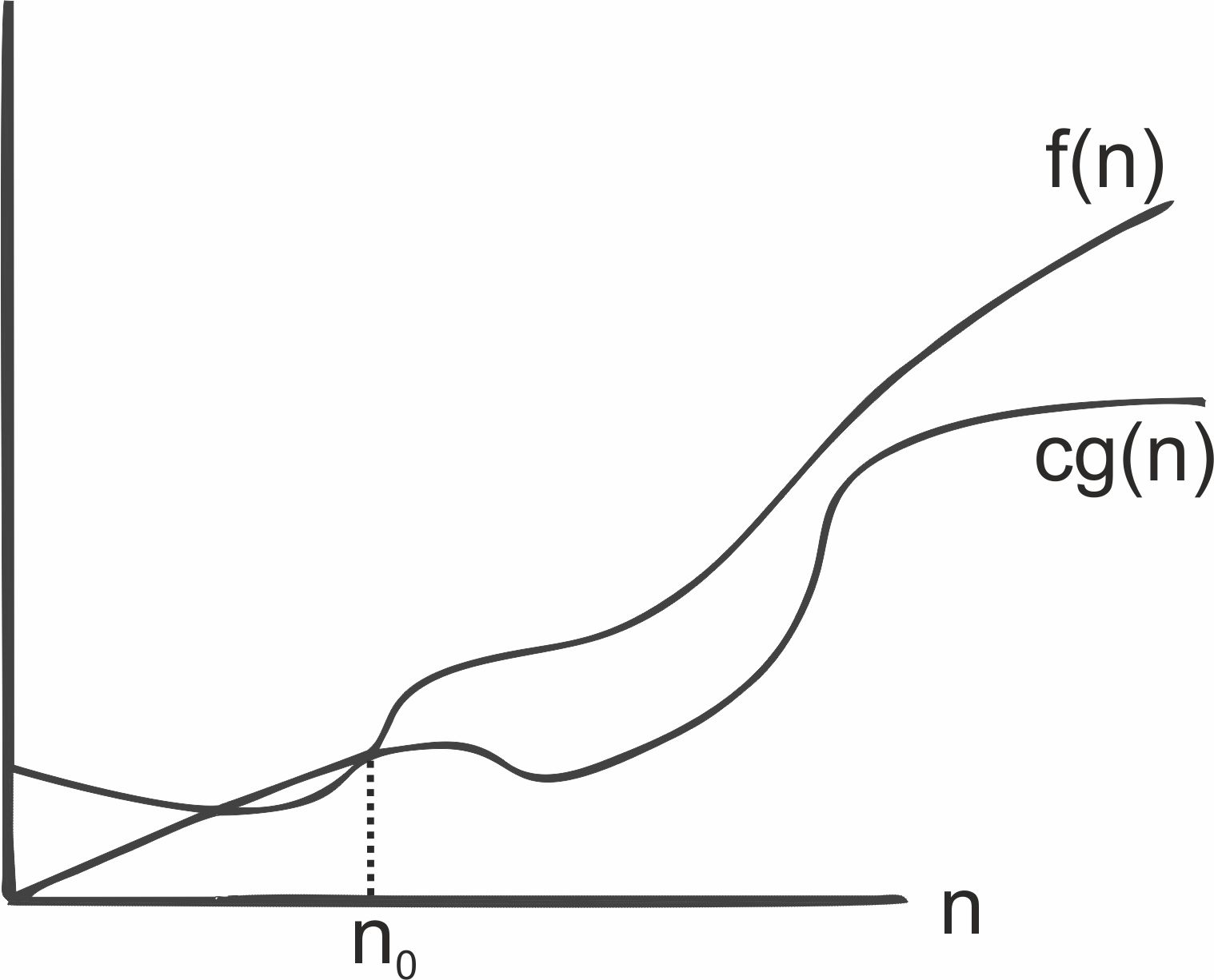

DefinitionThe function f(n) = Ω(g(n)) iff there exist positive constants c and n0 such that f(n) ≥ c*g(n) for all n, n ≥ n0.

Examples:

1. 3n+1 = Ω(n)

as 3n+1 ≥ 3n for all n ≥ 1.

c = 3 & n0 = 1

2. 3n+4 = Ω(n)

as 3n+4 ≥ 3n for all n ≥ 1.

c = 3 & n0 = 1

3. 10n2+4n+2 = Ω(n2)

as 10n2+4n+2 ≥ 10n2 for all n ≥ 1.

c = 10 & n0 = 1

Big Oh and Big Omega notations provide the upper and lower running bounds of the algorithm. These measures tell us that the running time of an algorithm cannot be more or less than their respective bounds. Big Oh notation provides the worst-case running time, whereas Big Omega provides the best-case running time of the algorithm. The running time of the algorithm cannot be better than Big Omega and it cannot be worse than Big Oh.

Next Topic :Big Theta(Θ)